During my fly-fishing vacation last year, pretty much nothing was happening on this blog. Now that I’ve migrated the blog to WordPress, I can schedule posts to appear when I’m not even at the computer. I’m using this functionality to re-blog a few posts from the archives during the month of August while I’m away. This post is from May 8, 2009:

I was recently alerted to a group of theoretical publications which deal with the issue of apparent ‘noise’ in neuronal populations. The Nature Reviews Neuroscience article “Neural correlations, population coding and computation” by Bruno B. Averbeck, Peter E. Latham & Alexandre Pouget covers this area quite well.

Basically, the authors claim that the variability one sees in recordings from the brain when the same stimulus is presented repeatedly is noise, that it must be detrimental to the transmission of information, and hence that it is a problem the brain must solve:

> Part of the difficulty in understanding population coding is that neurons are noisy: the same pattern of activity never occurs twice, even when the same stimulus is presented. Because of this noise, population coding is necessarily probabilistic. If one is given a single noisy population response, it is impossible to know exactly what stimulus occurred. Instead, the brain must compute some estimate of the stimulus (its best guess, for example), or perhaps a probability distribution over stimuli.
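To make the quoted decoding idea concrete ("its best guess" or "a probability distribution over stimuli"), here is a minimal Python sketch of the textbook toy model. It assumes a hypothetical population of 19 independent Poisson neurons with Gaussian tuning curves; the names (`PREFERRED`, `tuning`, `respond`, `ml_estimate`, `posterior`) and all parameters are my own illustrative choices, not taken from Averbeck, Latham & Pouget, and the independence assumption deliberately ignores the noise correlations that are the actual subject of their review. Repeating the same stimulus yields different spike counts, and the decoder returns a maximum-likelihood guess and a posterior under a flat prior.

```python
import math
import random

# Hypothetical population: 19 neurons with preferred stimuli 0, 10, ..., 180 (e.g. degrees).
PREFERRED = [10.0 * i for i in range(19)]


def tuning(s, pref, peak=20.0, width=20.0, baseline=1.0):
    """Mean spike count of a neuron with preferred stimulus `pref` (assumed Gaussian tuning)."""
    return baseline + peak * math.exp(-((s - pref) ** 2) / (2.0 * width ** 2))


def poisson(lam, rng):
    """Draw a Poisson-distributed count with mean `lam` (Knuth's multiplication method)."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def respond(s, rng):
    """One noisy population response: independent Poisson counts, one per neuron."""
    return [poisson(tuning(s, pref), rng) for pref in PREFERRED]


def log_likelihood(response, s):
    """Poisson log-likelihood of stimulus `s`, dropping the log(r!) term that does not depend on `s`."""
    return sum(r * math.log(tuning(s, pref)) - tuning(s, pref)
               for r, pref in zip(response, PREFERRED))


def ml_estimate(response, candidates):
    """'Best guess': the candidate stimulus with the highest likelihood."""
    return max(candidates, key=lambda s: log_likelihood(response, s))


def posterior(response, candidates):
    """Probability distribution over candidate stimuli, assuming a flat prior."""
    logs = [log_likelihood(response, s) for s in candidates]
    top = max(logs)  # subtract the maximum for numerical stability
    weights = [math.exp(l - top) for l in logs]
    total = sum(weights)
    return [w / total for w in weights]


if __name__ == "__main__":
    rng = random.Random(0)
    candidates = [float(s) for s in range(181)]
    for trial in range(3):
        r = respond(90.0, rng)  # the same stimulus on every trial
        post = posterior(r, candidates)
        print(r, ml_estimate(r, candidates), round(max(post), 3))
```

Within this toy model the trial-to-trial variability is, by construction, pure noise that the decoder has to average out. Whether recorded variability deep in the brain is of this kind is exactly what the rest of this post questions.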

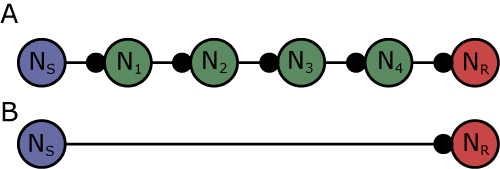

Note that the authors are not referring to sensory neurons, which code sensory information with great precision. Instead, they look at neurons deep in the mammalian brain, many synapses away from the primary sensory afferents. What I don’t understand from their article is why this variability should be considered ‘noise’. Obviously, if high fidelity between the site of sensory input and the site of recording were required, there would be a single axon running directly there, rather than a pathway through many synapses. Synapses are time-consuming and energetically expensive, and during development unused synapses are pruned throughout the brain. Thus, the variability must reflect some computation that takes place at the synapses along the path from the site of sensory input to the site of recording. Let me illustrate this with a picture:

If one records from neuron NR and stimulates sensory neuron NS, as in A, a lot of processing takes place at the synapses along N1-N4. This happens even without any external input into this single conveyor belt of information. Of course, no such conveyor belt actually exists; the idea itself is already misleading. After the very first synapse (wherever you start), there are always inputs from other sites, feed-forward and feedback connections, etc. But even in this simplest model of information transmission, every synapse is a computational component, not just a link between neurons that adds variability to the sensory signal for no reason. These synapses would not be there if their processing did not serve some important brain function. This is illustrated in B: if simple and reliable information transmission from NS to NR were what mattered, there would be just a single axon from NS to NR, without any additional processing.

I would really like to hear good arguments as to why the recorded variability should be ‘noise’ and a problem for information coding, rather than a reflection of the brain doing what it’s supposed to do: finding out what the best action is under the current circumstances.

Averbeck, B. B., Latham, P. E., & Pouget, A. (2006). Neural correlations, population coding and computation. Nature Reviews Neuroscience, 7(5), 358-366. DOI: 10.1038/nrn1888