tl;dr: So far, I can’t see any fundamental difference between our three kinds of intellectual output: software, data and texts.

I admit I’m somewhat surprised that there still appears to be a need to write this post in 2014. After all, this is no longer the dawn of the digital age. Be that as it may, it is now March 6, 2014, six days since PLoS’s ‘revolutionary’ data sharing policy was revealed, and only a few people seem to notice the irony of avid social media users pretending it’s still 1982. For the uninitiated, just skim Twitter’s #PLoSfail, read Edmund Hart’s post or see Terry McGlynn’s post for some examples. I’ll try to refrain from reiterating any arguments already made there.

Colloquially and somewhat crudely speaking, one could describe the scientific method as making an observation, making sense of the observation and presenting the outcome to interested audiences in some form of language. Since the scientific method emerged, sometime between the 16th century and now, this is roughly how science has progressed. Before the digital age, it was relatively difficult to let everybody who was interested participate in the observations. Today, this is much easier. It still varies tremendously between fields, obviously, but compared to before, it’s a world of difference. Today, you could say that scientists collect data, evaluate the data and then present the result in a scientific paper.

Data collection can be done either by hand or more or less automatically. It may take years to sample wildlife in the rainforest and minutes to evaluate the data on a spreadsheet. It may take decades to develop a method and then seconds to collect the data. It may take a few hours to generate the data, first by hand and then by automated processing, but decades before someone else comes up with the right way to analyze and evaluate the data. What all scientific processes today have in common is that at some point, the data become digitized, either by commercial software or by code written precisely for that purpose. Perhaps not in all, but surely in the vast majority of quantitative sciences, the data are then evaluated using either commercial or custom-written software, be it for statistical testing, visualization, modeling or parameter/feature extraction. Only after all this is done and understood does someone sit down and attempt to put the outcome of this process into words that scientists not involved in the work can make sense of.

Until about a quarter of a century ago, essentially all that was left of the scientific process above were the instruments used to make the observations and the textual accounts of them. OK, maybe also some paper records and, later, photographs. With a delay of about 25 years, the scientific community is now slowly awakening to the realization that the digitization of science would actually allow us to preserve the scientific process much more comprehensively. Besides being a boon for historians, reviewers, committees investigating scientific misconduct and the critical public, preserving this process promises the added benefit of letting us reward not only those few whose above-average marketing skills get their texts published in glamorous magazines, but also those who excel at scientific coding or data collection. For the first time in human history, we may even have a shot at developing software agents that trawl data for testable hypotheses no human could ever come up with; proofs of concept already exist. There is also the potential to alert colleagues to problems with their data, to use code for purposes its original author never dreamed of, or to extract parameters from data that the experimentalist lacked the skills to extract. In short, the advantages are too many to list and reach far beyond science itself.

Much as after the earlier (hypothetical) requirement of proofs for mathematical theorems, or the equally hypothetical requirement of statistics and sound methods, there is again resistance from the more conservative sections of the scientific community, for largely the same 10 reasons, which can be summed up as: “it’s too much work and it’s against my own interests”.

I can sympathize with this general objection, as making code and data available is more work and does facilitate scooping. However, the same can be said of publishing traditional texts: it is a lot of work that takes time away from experiments and opens the floodgates for others to build careers on the back of your work. Thus, any consistent proponent of “it’s too much work and it’s against my own interests” ought to resist text publications with just as much fervor as they resist publishing data and code. The same arguments apply.

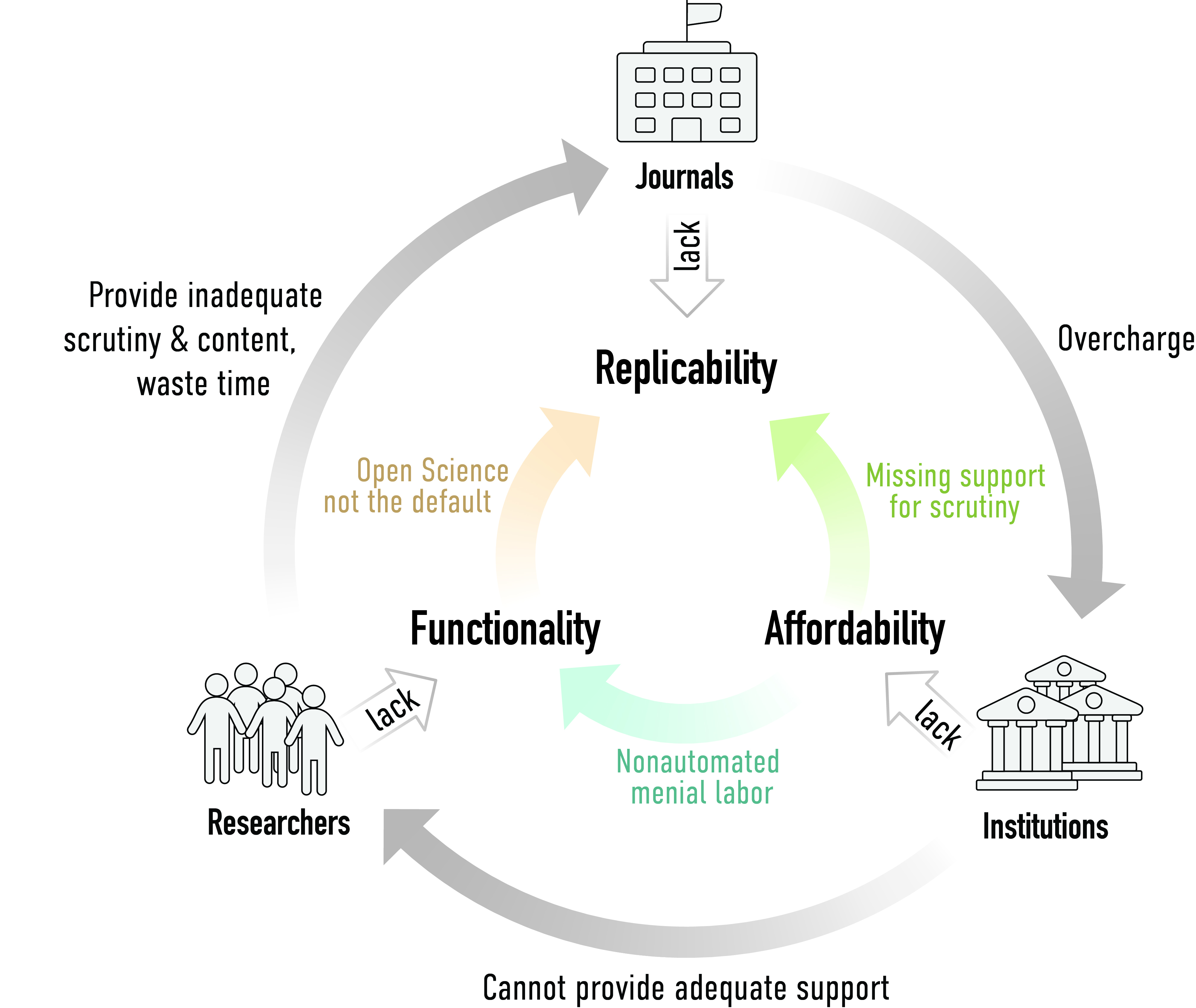

Such principles aside, in practice our general infrastructure of course makes it much too difficult to publish text, data or software, which is why so many of us now spend so much time and effort on publishing reform and why our lab in particular is developing ways to improve this infrastructure. But that, too, does not differ between software, data and text: our digital infrastructure is dysfunctional, period.

Nor does making your data and software available make you substantially more vulnerable to scooping or exploitation than publishing your texts does. The risks vary dramatically from field to field and from person to person and are impossible to quantify. Obviously, just as with text publications, data and code must be cited appropriately.

There may be instances where the person writing the code or collecting the data already knows what they want to do with the code/data next, but this will of course take time, and someone less gifted with ideas may be on the hunt for an easy text publication. In such (rare?) cases, I think a practical solution would be to attach a time-limited provision to the published data/code, stating the precise nature of the planned research and the time frame within which it must be concluded. Because the data and code are digital, any violation of such provisions would be easily detectable. The careers of our junior colleagues need to be protected, and any publishing policy on text, data or software ought to strive to maximize such protections without jeopardizing the entire scientific enterprise. Here, too, there is no difference between text, data and software.

Finally, one might make a reasonable case that the rewards are stacked disproportionately in favor of text publications, in particular publications in certain journals. However, it almost goes without saying that it is also unrealistic to expect tenure committees and grant evaluators to assess software and data contributions before anybody is even contributing and sharing data or code. Obviously, in order to be able to reward coders and experimentalists just as we reward the Glam hunters, we first need something to reward them for. That being said, in today’s antiquated system it is certainly a wise strategy to prioritize Glam publications over code and data publications, but without preventing change for the better in the process. This is obviously a chicken-and-egg situation that will not be solved by the parties involved waiting for each other. Change needs to happen on both sides if any change is to happen at all.

To sum it up: our intellectual output today manifests itself in code, data and text. All three are complementary and contribute equally to science. All three expose our innermost thoughts and ideas to the public, and all three make us vulnerable to exploitation. All three require diligence, time, work and frustration tolerance. All three constitute the fruits of our labor, often the most cherished outcome of our passion and dedication. It is almost an insult to the coders and experimentalists out there that these fruits should remain locked up and decay any longer. At the very least, any opponent of code and data sharing ought, to be consistent, to also oppose text publications for exactly the same reasons. We are already 25 years late in giving our CVs code, data and text sections under the “original research” heading. I see no reason why we should go on rewarding Glam-hunting marketers.

UPDATE: I was just alerted to an important and relevant distinction between text, data and code: file extension. Herewith duly noted.

There’s one more distinction, more relevant than file extensions but still unrelated to the point you make: legal issues. Code usually comes with a license in addition to a copyright statement, because it can be used in addition to being copied. No need to convince me that this distinction is fuzzy; I fully agree, but that doesn’t change the law. In principle, I need my employer’s consent to publish code, whereas I can publish a paper without asking for permission. This doesn’t seem to be a big issue in practice yet, but I suspect that this will change once scientists start to publish code on a massive scale. Is there a way we could prepare for this?

Hmm, these days, most papers also come with licenses, e.g. most of my recent papers are published CC-BY or something like that. I try to make other material available also as either CC-BY or CC0. My employer (a university) doesn’t care whether or how I publish code we generate.

Good question on how to prepare for legal issues concerning code/data publishing. I guess some “best practice” guidelines ought to be developed, but I’m fairly certain that these already exist. Other than that, awareness needs to be raised such that our code doesn’t end up locked behind paywalls…

Everything’s called a license, but CC licenses are about copying and (partial) republishing only, whereas code licenses (GPL, BSD, …) are also about transforming and running the code. I am aware of a couple of courses on this topic written in French and concerning French law (https://www.projet-plume.org/fr/ressource/du-patrimoine-culturel-a-la-production-scientifique-aspects-juridiques, https://www.projet-plume.org/fr/ressource/cours-droit-des-logiciels), but I suspect the situation is quite similar at least in most of Europe.

What I think we should aim for in the long run is the recognition of scientific software as science rather than as software. There should be no difference at all, legally speaking, between a paper and software source code.

Hmm, you think GPL might not be a good license for scientific software? I completely agree that text, data and software deserve equal treatment as scientific output, but I’m not expert enough on the different forms of intellectual property legislation to even begin to make a competent statement about which licenses ought to be used for which of the three, if the CC licenses are not applicable to all of them. Before your comment, I was thinking that CC-BY and CC0 would be fine for all three…

The problem is not so much the content of the license; GPL and BSD are both fine with me. It’s the fact that the licensing decision is not up to the scientists, but to the lawyers of the institutions they work for. Like most of my colleagues, I never ask for permission to publish software under an Open Source license, and until now this works fine because the cases are so few. If I did ask, I am pretty sure I’d be asked to wait until my employer has reached a decision, which might well take a few months. There are no such restrictions on publishing text and data, which scientists are allowed to publish without permission in most academic institutions.
