In the last “Science Weekly” podcast from the Guardian, the topic was retractions. At about 20:29 into the episode, Hannah Devlin asked whether ‘top’ journals retract more articles because of increased scrutiny there.

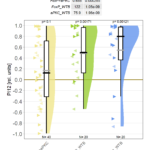

The underlying assumption is very reasonable: many more eyes see each paper in such journals, and the motivation to shoot down such high-profile papers might also be higher. However, the question has actually been addressed in the scientific literature, and the data don’t seem to support this assumption. For one, this figure shows that there are a lot of retractions from lower-ranking journals, although the individual journals that retract a lot are few and far between. In absolute terms, there are many more retractions in low-ranking journals than in high-ranking ones, while among the high-ranking journals a much larger proportion retracts many papers. Because this analysis only compares absolute numbers of retractions, these data are not conclusive, but they do suggest that scrutiny is not really all that much higher for the ‘top’ journals than anywhere else.

Another reason why scrutiny might be assumed to be higher in ‘top’ journals is that readership is higher, leading to more potential for error detection. However, the same reasoning applies to citations, not only to retractions. Moreover, citing a ‘top’ paper is not only easier than forcing a retraction, it also benefits your own research by elevating the perceived importance of your field. Thus, if readership had any such influence, one would expect journal rank to correlate better with citations than with retractions. The opposite is the case: the coefficient of determination for citations and journal rank currently lies around 0.2, while it is just under 0.8 for retractions and journal rank (Fig. 3 and Fig. 1D, respectively, here). So while there may be a small effect of scrutiny/motivation, the evidence suggests that it is relatively minor, if there is one at all.
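For readers who want to see what such a coefficient measures, here is a minimal sketch. It assumes the simple linear case, where the coefficient of determination equals the squared Pearson correlation; the function name `r_squared` and the toy numbers are made up for illustration and are not the data behind the figures cited above.

```python
from statistics import mean


def r_squared(x, y):
    """Squared Pearson correlation of two equally long sequences.

    For a simple linear fit of y on x, this equals the coefficient
    of determination (the share of variance in y explained by x).
    """
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy ** 2 / (sxx * syy)


# Hypothetical toy values, NOT real journal data.
toy_rank = [1, 2, 3, 4]
toy_outcome = [2, 4, 6, 8]  # perfectly linear in toy_rank
print(r_squared(toy_rank, toy_outcome))
```

A value near 1 means the outcome closely tracks journal rank; a value near 0 means rank explains very little of it.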

Conversely, there is quite solid evidence that the methodology in ‘top’ journals is not any better than in other journals, when analyzing non-retracted articles. In fact, there are studies showing that the methodology is actually worse in ‘top’ journals, while we have not found a single study suggesting the methodology gets better with journal rank. Our article reviews these studies. Importantly, these studies all concern non-retracted papers, i.e., the other 99.95% of the literature.

In conclusion, the evidence suggests scrutiny is likely a negligible factor in the correlation of journal rank and retractions, while increased incidence of fraud and lower methodological standards can be shown.

I know Ivan Oransky, who was a guest on the show, is aware of these data, so it may have been a bit unfortunate that Philip Campbell (editor-in-chief at Nature Magazine) got to answer this question before Ivan had a chance to lay these data out. In fact, Nature is also aware of these data and has twice declined to publish them. The first time was when we submitted our manuscript, which was rejected with the statement that Nature had already published articles stating that Nature publishes the worst science. The second time was when Cori Lok interviewed Jon Tennant and he told her about the data, but Cori failed to include this part of the interview. There is thus a record of Nature, very understandably, avoiding admitting its failure to select for solid science. Philip Campbell’s answer to the question in the podcast may have been at least the third time.

While Philip Campbell did admit they don’t do enough fraud detection (it is too rare), the issue of reliability in science goes far beyond fraud, so successfully steering the question in this direction served his company quite well. Clearly, he’s a clever guy and did not come unprepared.

Finally, one may ask: why do the ‘top’ journals publish unreliable science?

Probably the most important factor is that they attract “too good to be true” results, but only apply “peer-review light”: rejection rates drop dramatically from 92% to a mere 60% once your manuscript makes it past the editors. In other words, acceptance rises from 8% to 40%, a 5-fold increase in your publication chances (Noah Gray and Henry Gee, pers. comm.). Why is that so? First, the reviewers know the editor wants to publish this paper. Second, they have an automatic conflict of interest: a Nature paper in their field increases the visibility of that field, and they may even be cited in the paper or plan to cite it in their upcoming grant application.
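The 5-fold figure follows directly from the two rejection rates quoted above, since the acceptance rate is simply one minus the rejection rate. A quick sketch of that arithmetic (the function name is mine, chosen for illustration):

```python
def fold_change_in_acceptance(rejection_before: float, rejection_after: float) -> float:
    """Ratio of acceptance rates implied by two rejection rates (given as fractions)."""
    acceptance_before = 1.0 - rejection_before
    acceptance_after = 1.0 - rejection_after
    return acceptance_after / acceptance_before


# Rejection rates quoted in the text: 92% overall, 60% after passing the editors.
fold = fold_change_in_acceptance(0.92, 0.60)
print(round(fold, 2))
```

So a rejection rate that still sounds high at 60% hides a large jump in the odds of acceptance.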

Overall, this entire model is just a recipe for disaster, and more policing won’t fix it. By using it, we have been setting ourselves up for the exponential rise in retractions seen in Fig. 1a of our paper.

So, in the not-too-unlikely case that the topic of unreliable science comes up again, anyone can now cite the actual, peer-reviewed data we have at hand, so that editors-in-chief may have a harder time derailing the discussion and obfuscating the issues in the future.

tl;dr: The data suggest a combination of three factors leading to more retractions in ‘top’ journals: 1. Worse methodological quality; 2. Higher incidence of fraud; 3. Peer-review light. One would intuitively expect increased readership/scrutiny to play some role, but there is currently no evidence for it and some circumstantial evidence against it.
