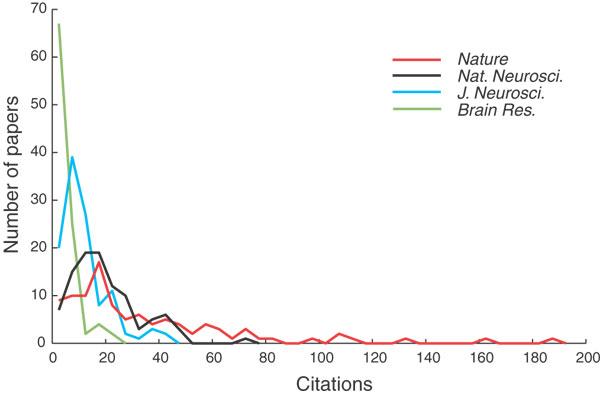

In what area of scholarship are replications of the very same experiment published again and again, greeted with surprise each time, only to be promptly ignored until the next study comes along? Case in point, from an area that ought to be relevant to almost every scientist on the planet: research evaluation. The first graph I know of that shows the right-skewed distribution of citation data is from 1997:

PO Seglen, the author of the above paper, concludes his analysis with the insight that “the journal cannot in any way be taken as representative of the article”.

In our paper reviewing the evidence on journal rank, we count a total of six subsequent (and one prior, in 1992) publications that show the right-skewed nature of citation data in one way or another. In other words, it is an established and often-reproduced fact that citation data are right-skewed. Such a distribution, of course, means that summarizing it by its arithmetic mean is a mistake that would earn an undergraduate a failing grade. Now Nature Neuroscience, home of the most novel and surprising neuroscience, adds to the already long list of replications with this ‘unexpected’ graph:

Only this time, the authors are not surprised: they appropriately cite PO Seglen’s 1997 paper and acknowledge that the finding is, of course, nothing new, “reinforcing the point that a journal’s IF (an arithmetic mean) is almost useless as a predictor of the likely citations to any particular paper in that journal”. Kudos, Nature Neuroscience editors!
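To see why an arithmetic mean says so little about the typical paper, here is a quick sketch with made-up numbers. The citation counts below are invented for illustration only (they are not Seglen’s or Nature Neuroscience’s data), and the calculation is a simplified, impact-factor-style average: a real journal impact factor uses a two-year citation window and a count of “citable items”, both of which this sketch ignores.

```python
from statistics import mean, median

# Invented citation counts for ten papers in one hypothetical journal:
# most papers are cited a handful of times, one paper is cited very often.
citations = [0, 1, 1, 2, 2, 3, 4, 5, 8, 74]

avg = mean(citations)    # simplified, impact-factor-style arithmetic mean
mid = median(citations)  # citations of the "typical" paper
below = sum(c < avg for c in citations) / len(citations)  # share of papers under the mean

print(f"mean = {avg}, median = {mid}, share of papers below the mean = {below:.0%}")
# prints: mean = 10, median = 2.5, share of papers below the mean = 90%
```

A single highly cited paper lifts the mean to four times the median, and nine out of ten papers in this toy journal sit below the journal’s own average, which is exactly why the journal cannot stand in for the article.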

What puzzles me just as much as it puzzles the authors, and what prompted me to write this post, is their last sentence:

Journal impact factors cannot be used to quantify the importance of individual papers or the credit due to their authors, and one of the minor mysteries of our time is why so many scientifically sophisticated people give so much credence to a procedure that is so obviously flawed.

In which other area of study does it take decades and countless replications before a basic fact is generally accepted? Could it be that we scientists are, perhaps, not as scientifically sophisticated as we’d like to think? Aren’t we, perhaps, just as dogmatic, lazy, stubborn and willfully ignorant as any random person on the street? What does this persistent resistance to education say about the scientific community at large? Is this not the gravest possible indictment of how the scientific community governs itself?

Good post; it reminds me of the article “We have met the enemy and it is us” by Mark Johnston. However, the blame lies with the academic managers more than with the community as a whole.

In my experience, we scientists are just as much to blame: http://blogarchive.brembs.net/comment-n911.html

You are right. But we should look for constructive ways to change this. One of them is to publicly blame the academic leaders who enforce this fashion through their management decisions, as well as those among them who publicly profess a passive opinion about it but whose behaviour endorses it (for example, by being members of pro-legacy-publisher organizations or websites, or by sitting on committees and doing nothing about it).

Why blame the leaders and not everybody? Because when things go well, the leader gets the credit, and when they go badly, the leader gets the blame; that’s how leadership works.

Nice post, Björn. Even though I fully agree with you that the IF has no predictive value for how many citations a paper will receive, I can’t help thinking that publishing in high-ranked journals has a symbolic value. Scientists naturally seek signs of status. I don’t think this is wrong in principle, even if it is irrational. It is just human.

I can’t tell whether you’re joking or being serious 🙂

You’re not really trying to say that it is OK to be a little irrational in the way we kick people out of science or promote them to professorships?

Will go and read your blog post now, just to see what I’m missing.
